Setting Up a Kubernetes Cluster with kubeadm

Environment

Prepare three Linux machines (this article uses Ubuntu 20.04 LTS) that can reach one another over the network.

| Node | IP | Memory |
|---|---|---|
| k8s-master | 192.168.153.132 | 4GB |
| k8s-worker1 | 192.168.153.133 | 4GB |
| k8s-worker2 | 192.168.153.134 | 4GB |

Install containerd, kubeadm, kubelet, and kubectl

On each of the three machines, save the following script, for example as install.sh.

#!/bin/bash
# Prepares an Ubuntu node for kubeadm. Run as root (for example with sudo).

echo "[TASK 1] Disable and turn off SWAP"
sed -i '/swap/d' /etc/fstab
swapoff -a

echo "[TASK 2] Stop and Disable firewall"
systemctl disable --now ufw >/dev/null 2>&1

echo "[TASK 3] Enable and Load Kernel modules"
# Overwrite (>) instead of append (>>) so that re-running the script does not duplicate entries.
cat >/etc/modules-load.d/containerd.conf<<EOF
overlay
br_netfilter
EOF
modprobe overlay
modprobe br_netfilter

echo "[TASK 4] Add Kernel settings"
cat >/etc/sysctl.d/kubernetes.conf<<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
sysctl --system >/dev/null 2>&1

echo "[TASK 5] Install containerd runtime"
mkdir -p /etc/apt/keyrings
# --yes lets gpg overwrite the keyring on a re-run; sudo is not needed because the script already runs as root.
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor --yes -o /etc/apt/keyrings/docker.gpg
# The source entry must be written as a single line.
echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list >/dev/null
apt -qq update >/dev/null 2>&1
apt install -qq -y containerd.io >/dev/null 2>&1
containerd config default >/etc/containerd/config.toml
# kubeadm configures the kubelet for the systemd cgroup driver by default, so switch containerd's runc runtime to match.
sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml
systemctl restart containerd
systemctl enable containerd >/dev/null 2>&1

echo "[TASK 6] Add apt repo for kubernetes"
# Note: the apt.kubernetes.io repository was frozen in September 2023 and later taken offline.
# On current systems the community-owned pkgs.k8s.io repository has to be used instead.
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add - >/dev/null 2>&1
apt-add-repository "deb http://apt.kubernetes.io/ kubernetes-xenial main" >/dev/null 2>&1

echo "[TASK 7] Install Kubernetes components (kubeadm, kubelet and kubectl)"
apt install -qq -y kubeadm=1.26.0-00 kubelet=1.26.0-00 kubectl=1.26.0-00 >/dev/null 2>&1
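The apt.kubernetes.io repository used in TASK 6 has since been retired upstream. As a rough sketch, this is how the same step might look against the community-owned pkgs.k8s.io repository. The helper name k8s_repo_line and the keyring path are illustrative choices, not part of the original script. The package revision suffix in that repository differs from the legacy `-00` format, so list the available versions before pinning one.

```shell
#!/bin/sh
# Sketch: build the apt source line for one Kubernetes minor release.
# k8s_repo_line is a hypothetical helper; the keyring path is an assumption.
KEYRING=/etc/apt/keyrings/kubernetes-apt-keyring.gpg

k8s_repo_line() {
  # $1 is the minor release, for example v1.26
  printf 'deb [signed-by=%s] https://pkgs.k8s.io/core:/stable:/%s/deb/ /\n' "$KEYRING" "$1"
}

# Preview the line that would be written for the release used in this article.
k8s_repo_line v1.26

# To apply it as root (left commented so the sketch has no side effects):
#   curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.26/deb/Release.key | gpg --dearmor --yes -o "$KEYRING"
#   k8s_repo_line v1.26 > /etc/apt/sources.list.d/kubernetes.list
#   apt update && apt-cache madison kubeadm   # list installable versions before pinning one
```

Each minor release has its own repository path, so moving to a newer release later means rewriting this one line.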

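Run the script:

sudo bash install.sh

When the script finishes, check that the installation succeeded:

kubeadm version

kubelet --version

kubectl version --client

The version installed here is 1.26.0; run apt list -a kubeadm to see the available versions.

Initialize the master node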

On the master node, first pull the images the cluster needs:

sudo kubeadm config images pull

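Initialize kubeadm

- --apiserver-advertise-address: the local IP address used to communicate with the other nodes (that is, the master node's IP address)
- --pod-network-cidr: the address range for the pod network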

sudo kubeadm init --apiserver-advertise-address=192.168.153.132 --pod-network-cidr=10.244.0.0/16

Save the last part of the output; it lists the steps that follow:

- Set up .kube
- Deploy a pod network add-on
- Add the worker nodes

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.153.132:6443 --token g6f2uw.v1hucu8kk5za3wi3 \

--discovery-token-ca-cert-hash sha256:b4c8fa25b072dcd61c796859187517d29b84b0604f47c93fb38b1c90d416dd12

The last two lines are the most important: they are needed later to add the worker nodes.

kubeadm join 192.168.153.132:6443 --token g6f2uw.v1hucu8kk5za3wi3 \
    --discovery-token-ca-cert-hash sha256:b4c8fa25b072dcd61c796859187517d29b84b0604f47c93fb38b1c90d416dd12

1. Following the prompt above, first create the .kube directory and its configuration:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

To use kubectl from zsh, also add the setting to .zshrc:

echo "export KUBECONFIG=$HOME/.kube/config" >> ~/.zshrc

source ~/.zshrc

2. Deploy the pod network add-on

This guide uses kube-flannel. Save the following manifest as flannel.yml:

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - "networking.k8s.io"
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: docker.io/flannel/flannel-cni-plugin:v1.1.2
        #image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.2
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: docker.io/flannel/flannel:v0.21.2
        #image: docker.io/rancher/mirrored-flannelcni-flannel:v0.21.2
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: docker.io/flannel/flannel:v0.21.2
        #image: docker.io/rancher/mirrored-flannelcni-flannel:v0.21.2
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        - --iface=ens33
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

这里有两个地方需要注意,请自行修改:

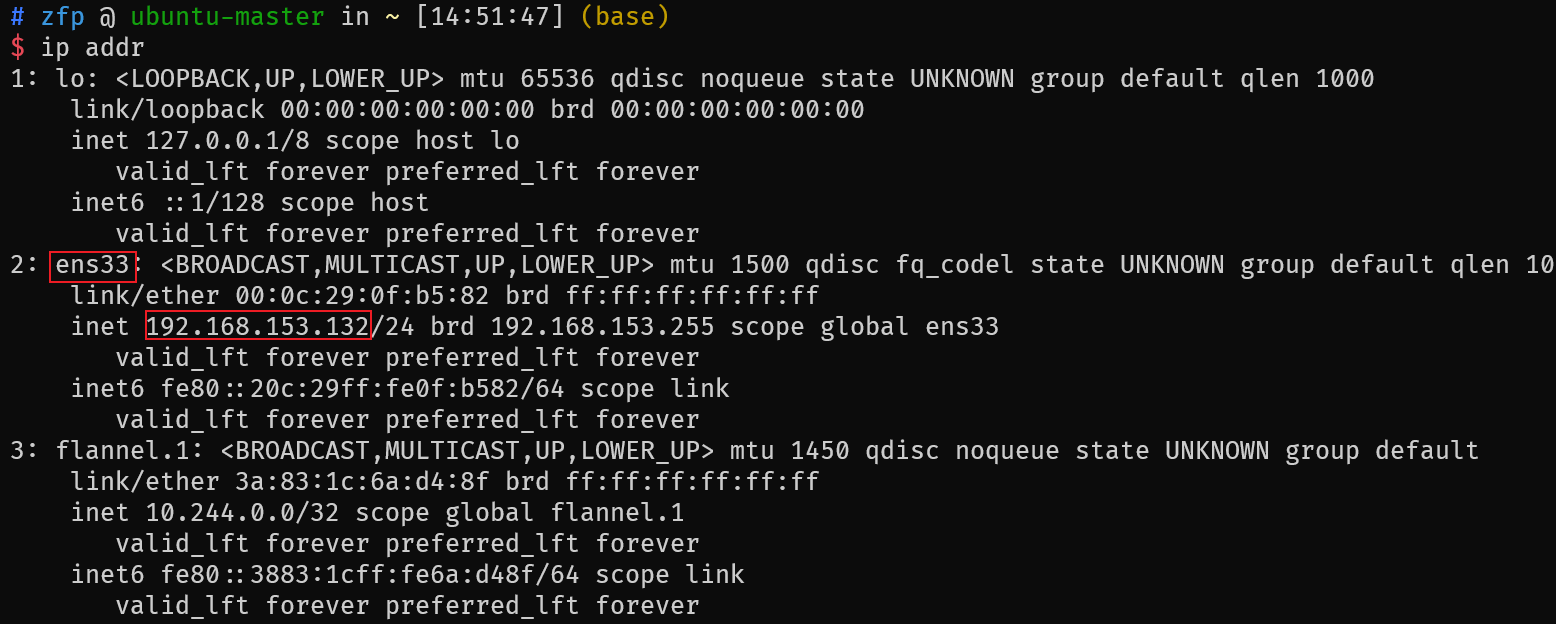

1.- --iface=ens33: iface后面的网卡名称需要和主机ip相对应

2.确保network是初始化kubeadm指定的地址空间: --pod-network-cidr=10.244.0.0/16

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}
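Both settings can be checked from the shell before applying the manifest. The following is a minimal sketch, assuming the manifest is saved as flannel.yml. The helper names iface_for_ip and flannel_network are made up for this example, and the sample line only imitates the format of `ip -o -4 addr show`.

```shell
#!/bin/sh
# iface_for_ip IP: print the interface that carries the given IPv4 address.
# Expects the output of `ip -o -4 addr show` on stdin.
iface_for_ip() {
  awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) print $2 }'
}

# flannel_network FILE: print the "Network" CIDR found in a flannel manifest.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1"
}

# Illustrative input in the format printed by `ip -o -4 addr show`.
sample='2: ens33    inet 192.168.153.132/24 brd 192.168.153.255 scope global ens33'
printf '%s\n' "$sample" | iface_for_ip 192.168.153.132
```

On a real node, `ip -o -4 addr show | iface_for_ip 192.168.153.132` gives the value for --iface, and `flannel_network flannel.yml` should print the same CIDR that was passed to --pod-network-cidr.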

Apply flannel.yml to create the resources:

kubectl apply -f flannel.yml

Check that the master node is Ready:

$ kubectl get node

NAME STATUS ROLES AGE VERSION

ubuntu-master Ready control-plane 15h v1.26.0

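Add the worker nodes

On each worker node, first run install.sh to install kubeadm and the other components, then join the cluster as root with the command saved when the master node was initialized:

kubeadm join 192.168.153.132:6443 --token g6f2uw.v1hucu8kk5za3wi3 \
    --discovery-token-ca-cert-hash sha256:b4c8fa25b072dcd61c796859187517d29b84b0604f47c93fb38b1c90d416dd12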

What if the join token and discovery-token-ca-cert-hash were not saved? The token can be listed with kubeadm token list:

$ kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

g6f2uw.v1hucu8kk5za3wi3 8h 2023-02-22T15:34:44Z authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

The discovery-token-ca-cert-hash can be computed with openssl:

$ openssl x509 -in /etc/kubernetes/pki/ca.crt -pubkey -noout |

openssl pkey -pubin -outform DER |

openssl dgst -sha256

(stdin)= b4c8fa25b072dcd61c796859187517d29b84b0604f47c93fb38b1c90d416dd12
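Bootstrap tokens expire (note the TTL column above), so an old join command eventually stops working. The sketch below wraps the openssl pipeline in a function and reassembles the join line from its parts. The function names ca_cert_hash and join_command are illustrative. `kubeadm token create --print-join-command` is the built-in shortcut that issues a new token and prints the full command in one step.

```shell
#!/bin/sh
# ca_cert_hash FILE: print the sha256 hash of the public key in a CA certificate.
# awk keeps only the hex digest, whatever prefix openssl prints before it.
ca_cert_hash() {
  openssl x509 -in "$1" -pubkey -noout |
    openssl pkey -pubin -outform DER |
    openssl dgst -sha256 |
    awk '{ print $NF }'
}

# join_command ENDPOINT TOKEN HASH: assemble the kubeadm join line.
join_command() {
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' "$1" "$2" "$3"
}

# On the master node (left commented so the sketch has no side effects):
#   kubeadm token create --print-join-command
# or, reusing a token that is still listed by `kubeadm token list`:
#   join_command 192.168.153.132:6443 "$TOKEN" "$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```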

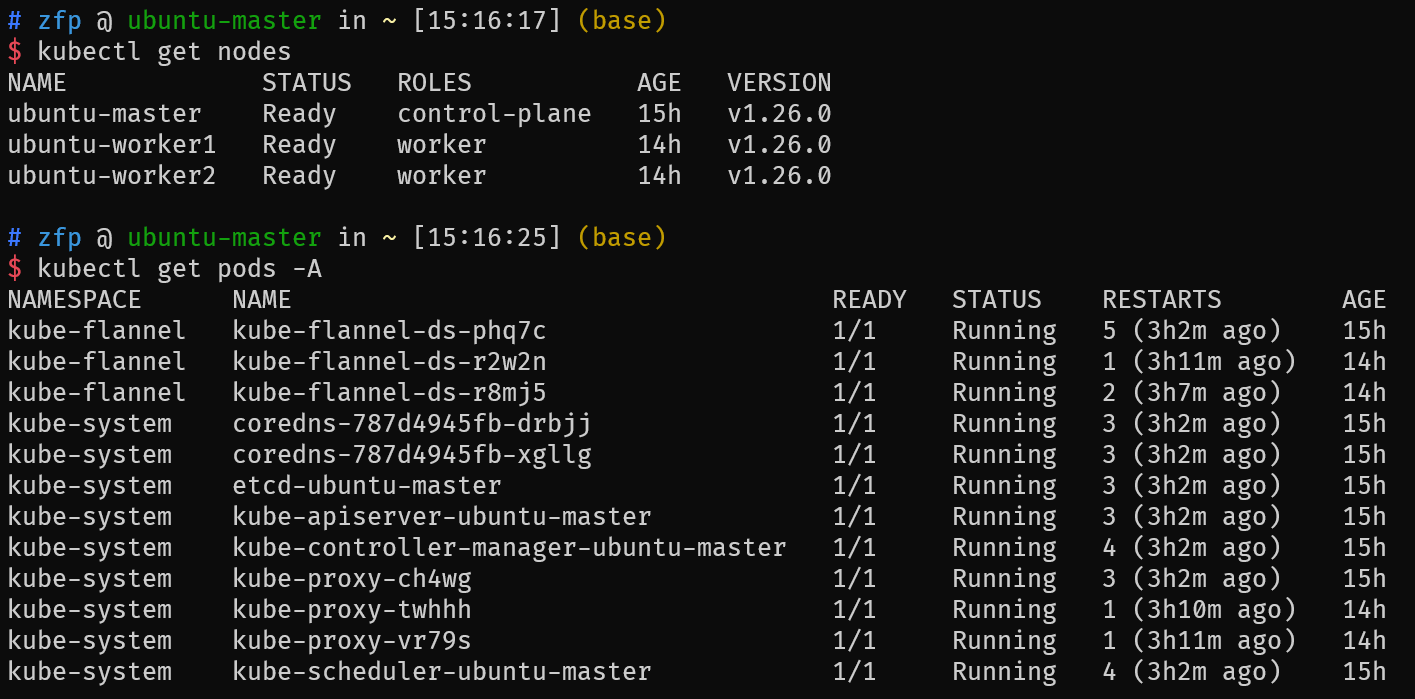

Finally, list all nodes and pods from the master node:

kubectl get nodes

kubectl get pods -A

Verify the kubeadm cluster

Create an nginx pod:

$ kubectl run web --image nginx

pod/web created

$ kubectl get pod

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 38s

Create a service for the nginx pod; curl can then reach the service's cluster IP:

$ kubectl expose pod web --port=80 --name=web-service

service/web-service exposed

$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15h

web-service ClusterIP 10.108.195.115 <none> 80/TCP 7s

$ curl 10.108.195.115:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Delete the pod and the service:

$ kubectl delete service web-service

$ kubectl delete pod web

Troubleshooting

1. error: error loading config file "/etc/kubernetes/admin.conf": open /etc/kubernetes/admin.conf: permission denied

Copy the admin kubeconfig into your home directory and point KUBECONFIG at it:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

echo "export KUBECONFIG=$HOME/.kube/config" >> ~/.zshrc

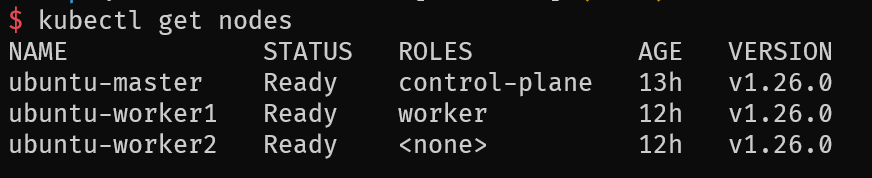

2. Worker nodes show <none> under ROLES

Label the node with the following command:

kubectl label node <node-name> node-role.kubernetes.io/worker=worker
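With more than one worker, the label commands can be generated from the node list. This is a small sketch, assuming the default column layout of `kubectl get nodes --no-headers` (NAME, STATUS, ROLES, ...). The function name label_commands is made up, and the sample rows are illustrative rather than captured from a real cluster.

```shell
#!/bin/sh
# label_commands: read `kubectl get nodes --no-headers` output on stdin and
# print one kubectl label command per node whose ROLES column is <none>.
label_commands() {
  awk '$3 == "<none>" { printf "kubectl label node %s node-role.kubernetes.io/worker=worker\n", $1 }'
}

# Illustrative input; only the node without a role produces a command.
printf '%s\n' \
  'k8s-master    Ready   control-plane   15h   v1.26.0' \
  'k8s-worker1   Ready   <none>          5m    v1.26.0' |
  label_commands
```

The function only prints the commands. To run them against a cluster, pipe the output to a shell: `kubectl get nodes --no-headers | label_commands | sh`.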

3. A node's INTERNAL-IP is wrong

kubectl get nodes -o wide shows an INTERNAL-IP for the node that does not start with 192.

Check the host's IP addresses:

$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UNKNOWN group default qlen 1000

link/ether 00:0c:29:0f:b5:82 brd ff:ff:ff:ff:ff:ff

inet 192.168.153.132/24 brd 192.168.153.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe0f:b582/64 scope link

valid_lft forever preferred_lft forever

Edit /var/lib/kubelet/kubeadm-flags.env and add a new variable, KUBELET_EXTRA_ARGS, that sets the node IP to this machine's ens33 address, then save and exit.

KUBELET_KUBEADM_ARGS="--container-runtime=remote --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock --pod-infra-container-image=k8s.gcr.io/pause:3.7"

KUBELET_EXTRA_ARGS="--node-ip=192.168.153.132"

Restart kubelet; the master node's INTERNAL-IP is then displayed correctly.

sudo systemctl daemon-reload

sudo systemctl restart kubelet

kubectl get node -o wide
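One caveat: kubeadm-flags.env is generated by kubeadm during init and join, so a manual edit there may be overwritten. The kubelet service installed from the Debian packages also reads KUBELET_EXTRA_ARGS from /etc/default/kubelet. As a hedged alternative, the sketch below writes the setting to a file passed as an argument. The function name set_node_ip is illustrative, and the function replaces any existing KUBELET_EXTRA_ARGS line rather than merging flags.

```shell
#!/bin/sh
# set_node_ip FILE IP: make sure FILE holds exactly one KUBELET_EXTRA_ARGS line
# that pins --node-ip. An existing KUBELET_EXTRA_ARGS line is replaced, so any
# other extra flags it carried are dropped.
set_node_ip() {
  file="$1"
  ip="$2"
  touch "$file"
  # grep -v exits non-zero when nothing is left to print; that is not an error here.
  grep -v '^KUBELET_EXTRA_ARGS=' "$file" > "$file.tmp" || true
  printf 'KUBELET_EXTRA_ARGS="--node-ip=%s"\n' "$ip" >> "$file.tmp"
  mv "$file.tmp" "$file"
}

# As root on the affected node (left commented so the sketch has no side effects):
#   set_node_ip /etc/default/kubelet 192.168.153.132
#   systemctl restart kubelet
```

Running the function twice leaves a single line in the file, so it is safe to re-run.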

4. Add kubectl completion for zsh

# kubectl completion

source <(kubectl completion zsh)

echo "source <(kubectl completion zsh)" >> ~/.zshrc

# Reload the zsh configuration

source ~/.zshrc

5. Error: configuration files already exist

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

Delete the existing configuration: sudo rm -rf /etc/kubernetes/*

6. Error: port already in use

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Port-10250]: Port 10250 is in use

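Port 10250 is the kubelet port, so a kubelet left over from an earlier init or join is still running. Reset the node with kubeadm:

sudo kubeadm reset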
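kubeadm reset itself reports that it does not clean up the CNI configuration or kubeconfig files. A cautious sketch of the full cleanup follows. It defaults to a dry run that only prints the commands, and the names run and reset_node are illustrative.

```shell
#!/bin/sh
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 and run
# as root to execute them.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

reset_node() {
  run kubeadm reset -f                # tear down what kubeadm init/join created
  run rm -rf /etc/cni/net.d           # CNI configuration is not removed by kubeadm reset
  run rm -f "$HOME/.kube/config"      # stale kubeconfig pointing at the old cluster
}

reset_node
```

After a reset, a worker node has to run kubeadm join again before it reappears in the cluster.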